Over the past few years we’ve heard more about smartphone encryption than, quite frankly, most of us expected to hear in a lifetime. We learned that proper encryption can slow down even sophisticated decryption attempts if done correctly. We’ve also learned that incorrect implementations can undo most of that security.

In other words, phone encryption is an area where details matter. For the past few weeks I’ve been looking a bit at Android Nougat’s new file-based encryption to see how well they’ve addressed some of those details in their latest release. The answer, unfortunately, is that there’s still lots of work to do. In this post I’m going to talk about a bit of that.

(As an aside: the inspiration for this post comes from Grugq, who has been loudly and angrily trying to work through these kinks to develop a secure Android phone. So credit where credit is due.)

Background: file and disk encryption

Disk encryption is much older than smartphones. Indeed, early encrypting filesystems date back at least to the early 1990s and proprietary implementations may go back before that. Even in the relatively new area of PC operating systems, disk encryption has been a built-in feature since the early 2000s.

The typical PC disk encryption system operates as follows. At boot time you enter a password. This is fed through a key derivation function to derive a cryptographic key. If a hardware co-processor is available (e.g., a TPM), your key is further strengthened by “tangling” it with some secrets stored in the hardware. This helps to lock encryption to a particular device.
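To make the two steps above concrete, here is a minimal sketch in Python. It is not how any particular product does it: the choice of PBKDF2, the iteration count, and the use of HMAC to mix in the device secret are all assumptions for illustration, and `hardware_secret` is a hypothetical stand-in for a value that would really live inside a TPM or similar chip.

```python
import hashlib
import hmac
import os


def derive_disk_key(password: str, salt: bytes, hardware_secret: bytes) -> bytes:
    """Derive a 32-byte disk key from a password and a device-bound secret."""
    # Step 1: a slow, salted key derivation function makes each password
    # guess expensive. (PBKDF2 and 200,000 iterations are assumptions.)
    stretched = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, 200_000, dklen=32
    )
    # Step 2: "tangle" the result with a secret that never leaves the device,
    # so the same password yields a different key on different hardware.
    return hmac.new(hardware_secret, stretched, hashlib.sha256).digest()


salt = os.urandom(16)             # stored in the clear next to the ciphertext
hardware_secret = os.urandom(32)  # hypothetical stand-in for a TPM-held secret
disk_key = derive_disk_key("correct horse battery staple", salt, hardware_secret)
print(len(disk_key), "byte key derived")
```

The point of the second step is that an attacker who copies the disk but not the hardware secret cannot even begin guessing passwords offline.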

The actual encryption can be done in one of two different ways:

- Full Disk Encryption (FDE) systems (like TrueCrypt, BitLocker and FileVault) encrypt disks at the level of disk sectors. This is an all-or-nothing approach, since the encryption drivers won’t necessarily have any idea what files those sectors represent. At the same time, FDE is popular, mainly because it’s extremely easy to implement.

- File-based Encryption (FBE) systems (like EncFS and eCryptfs) encrypt individual files. This approach requires changes to the filesystem itself, but has the benefit of allowing fine-grained access controls where individual files are encrypted using different keys.

Most commercial PC disk encryption software has historically opted to use the full-disk encryption (FDE) approach. Mostly this is just a matter of expediency: FDE is just significantly easier to implement. But philosophically, it also reflects a particular view of what disk encryption was meant to accomplish.

In this view, encryption is an all-or-nothing proposition. Your machine is either on or off; accessible or inaccessible. As long as you make sure to have your laptop stolen only when it’s off, disk encryption will keep you perfectly safe.

So what does this have to do with Android?

Android’s early attempts at adding encryption to their phones followed the standard PC full-disk encryption paradigm. Beginning in Android 4.4 (KitKat) through Android 6.0 (Marshmallow), Android systems shipped with a kernel device mapper called dm-crypt designed to encrypt disks at the sector level. This represented a quick and dirty way to bring encryption to Android phones, and it made sense, if you believe that phones are just very tiny PCs.

The problem is that smartphones are not PCs.

The major difference is that smartphone users are never encouraged to shut down their device. In practice this means that — after you enter a passcode once after boot — normal users spend their whole day walking around with all their cryptographic keys in RAM. Since phone batteries live for a day or more (a long time compared to laptops) encryption doesn’t really offer much to protect you against an attacker who gets their hands on your phone during this time.

Of course, users do lock their smartphones. In principle, a clever implementation could evict sensitive cryptographic keys from RAM when the device locks, then re-derive them the next time the user logs in. Unfortunately, Android doesn’t do this — for the very simple reason that Android users want their phones to actually work. Without cryptographic keys in RAM, an FDE system loses access to everything on the storage drive. In practice this turns it into a brick.

For this very excellent reason, once you boot an Android FDE phone it will never evict its cryptographic keys from RAM. And this is not good.

So what’s the alternative?

Android is not the only game in town when it comes to phone encryption. Apple, for its part, also gave this problem a lot of thought and came to a subtly different solution.

Starting with iOS 4, Apple included a “data protection” feature to encrypt all data stored on a device. But unlike Android, Apple doesn’t use the full-disk encryption paradigm. Instead, they employ a file-based encryption approach that individually encrypts each file on the device.

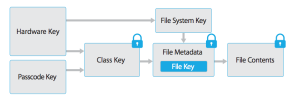

In the Apple system, the contents of each file are encrypted under a unique per-file key (metadata is encrypted separately). The file key is in turn encrypted with one of several “class keys” that are derived from the user passcode and some hardware secrets embedded in the processor.
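The two-level key hierarchy is easier to see in code. The sketch below is a toy: Python's standard library has no AES, so `toy_wrap` (a made-up helper) XORs the file key with an HMAC-derived pad as a stand-in for a real key-wrapping cipher. Only the structure is meant to carry over, not the cryptography.

```python
import hashlib
import hmac
import os


def toy_wrap(wrapping_key: bytes, nonce: bytes, key: bytes) -> bytes:
    """XOR a 32-byte key with an HMAC-derived pad.

    Toy stand-in for a real key-wrap algorithm; do not use in practice.
    """
    if len(key) != 32:
        raise ValueError("this toy only handles 32-byte keys")
    pad = hmac.new(wrapping_key, nonce, hashlib.sha256).digest()
    return bytes(a ^ b for a, b in zip(key, pad))


toy_unwrap = toy_wrap  # XOR with the same pad undoes the wrap

class_key = os.urandom(32)  # in reality derived from passcode + hardware secrets
file_key = os.urandom(32)   # unique per file, encrypts the file contents
nonce = os.urandom(16)      # per-file value stored next to the wrapped key

wrapped_file_key = toy_wrap(class_key, nonce, file_key)

# Only (nonce, wrapped_file_key) is written to storage. Without the class
# key in RAM, the file key, and therefore the file, cannot be recovered.
recovered = toy_unwrap(class_key, nonce, wrapped_file_key)
print("file key recovered:", recovered == file_key)
```

The useful property is that throwing away one class key locks every file in that class at once, without re-encrypting any file contents.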

The main advantage of the Apple approach is that instead of a single FDE key to rule them all, Apple can implement fine-grained access control for individual files. To enable this, iOS provides an API developers can use to specify which class key to use in encrypting any given file. The available “protection classes” include:

- Complete protection. Files encrypted with this class key can only be accessed when the device is powered up and unlocked. To ensure this, the class key is evicted from RAM a few seconds after the device locks.

- Protected Until First User Authentication. Files encrypted with this class key are protected until the user first logs in (after a reboot), and the key remains in memory.

- No protection. These files are accessible even when the device has been rebooted, and the user has not yet logged in.

By giving developers the option to individually protect different files, Apple made it possible to build applications that can work while the device is locked, while providing strong protection for files containing sensitive data.
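Here is a rough model of which class keys sit in RAM as the device moves between states. The class and method names are invented for illustration, the derivation is a placeholder hash rather than Apple's real scheme, there is no passcode verification, and Python cannot truly guarantee that memory is scrubbed. Treat it as a picture of the lifecycle, nothing more.

```python
import hashlib


class ClassKeyStore:
    """Toy model of class keys held in RAM across reboot, unlock and lock."""

    def __init__(self, hardware_secret: bytes):
        self._hw = hardware_secret
        self.ram = {}  # class name -> bytearray of key material
        self.reboot()

    def _derive(self, name: str, passcode: str = "") -> bytearray:
        # Placeholder derivation (assumption): hash of secret, passcode, name.
        material = self._hw + passcode.encode() + name.encode()
        return bytearray(hashlib.sha256(material).digest())

    def _evict(self, name: str) -> None:
        key = self.ram.pop(name, None)
        if key is not None:
            key[:] = bytes(len(key))  # overwrite before dropping the reference

    def reboot(self) -> None:
        for name in list(self.ram):
            self._evict(name)
        # "No protection" needs no passcode, so it is available right away.
        self.ram["none"] = self._derive("none")

    def unlock(self, passcode: str) -> None:
        self.ram["complete"] = self._derive("complete", passcode)
        self.ram.setdefault(
            "after_first_unlock", self._derive("after_first_unlock", passcode)
        )

    def lock(self) -> None:
        # Only the "complete protection" key is evicted on lock.
        self._evict("complete")


store = ClassKeyStore(hardware_secret=b"\x01" * 32)
store.unlock("1234")
store.lock()
print(sorted(store.ram))  # ['after_first_unlock', 'none']
```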

Apple even created a fourth option for apps that simply need to create new encrypted files when the class key has been evicted from RAM. This class uses public key encryption to write new files. This is why you can safely take pictures even when your device is locked.
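The trick behind this fourth class is ordinary public key agreement: a locked device keeps only the class public key around, and that is enough to seal a new file key. Apple's documentation describes using Diffie-Hellman over Curve25519 for this. The sketch below swaps in classic Diffie-Hellman over a tiny, insecure prime field, because that fits in a few lines of standard-library Python; the function names are made up for illustration.

```python
import hashlib
import secrets

# Toy parameters (assumption): a 127-bit Mersenne prime, far too small to be
# secure. A real system would use an elliptic curve such as Curve25519.
P = 2**127 - 1
G = 3


def keypair():
    private = secrets.randbelow(P - 2) + 1  # uniform in 1..P-2
    return private, pow(G, private, P)


def kdf(shared: int) -> bytes:
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def xor32(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def write_while_locked(class_public: int, file_key: bytes):
    """Seal a new 32-byte file key using only the class PUBLIC key."""
    eph_private, eph_public = keypair()
    shared = pow(class_public, eph_private, P)
    wrapped = xor32(file_key, kdf(shared))  # toy wrap, not real key wrapping
    # eph_private and shared go out of scope here; only the public half and
    # the wrapped key are stored with the file.
    return eph_public, wrapped


def read_after_unlock(class_private: int, eph_public: int, wrapped: bytes) -> bytes:
    """Recover the file key once the class PRIVATE key is back in RAM."""
    shared = pow(eph_public, class_private, P)
    return xor32(wrapped, kdf(shared))


class_private, class_public = keypair()  # created while the device is unlocked
photo_key = secrets.token_bytes(32)      # key for a photo taken while locked
eph_public, wrapped = write_while_locked(class_public, photo_key)
print(read_after_unlock(class_private, eph_public, wrapped) == photo_key)
```

Once the writer discards the file key and its ephemeral private key, nothing left on a locked device can unwrap the file again until the user unlocks and the class private key returns.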

Apple’s approach isn’t perfect. What it is, however, is the obvious result of a long and careful thought process. All of which raises the following question…

Why the hell didn’t Android do this as well?

The short answer is Android is trying to. Sort of. Let me explain.

As of Android 7.0 (Nougat), Google has moved away from full-disk encryption as the primary mechanism for protecting data at rest. If you set a passcode on your device, Android N systems can be configured to support a more Apple-like approach that uses file encryption. So far so good.

The new system is called Direct Boot, so named because it addresses what Google obviously saw as a fatal problem with Android FDE: namely, that FDE-protected phones are useless bricks following a reboot. The main advantage of the new model is that it allows phones to access some data even before you enter the passcode. This is enabled by providing developers with two separate “encryption contexts”:

- Credential encrypted storage. Files in this area are encrypted under the user’s passcode, and won’t be available until the user enters their passcode (once).

- Device encrypted storage. These files are not encrypted under the user’s passcode (though they may be encrypted using hardware secrets). Thus they are available after boot, even before the user enters a passcode.

Direct Boot even provides separate encryption contexts for different users on the phone — something I’m not quite sure what to do with. But sure, why not?

If Android is making all these changes, what’s the problem?

One thing you might have noticed is that where Apple had four categories of protection, Android N only has two. And it’s the two missing categories that cause the problems. These are the “complete protection” categories that allow the user to lock their device following first user authentication — and evict the keys from memory.
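A small table makes the gap visible. The sketch below encodes, for each class described in this post, the device states in which its key is available, then asks which classes are actually sealed in a given state. The state names are mine, and the fourth Apple class is left out because it only concerns writing new files.

```python
# Device states in which each class's key is available, per the
# descriptions in this post. State names are invented for this sketch.
IOS = {
    "complete protection": {"unlocked"},
    "protected until first user authentication": {"unlocked", "locked"},
    "no protection": {"before_first_unlock", "unlocked", "locked"},
}

ANDROID_N = {
    "credential encrypted": {"unlocked", "locked"},
    "device encrypted": {"before_first_unlock", "unlocked", "locked"},
}


def protected_when(platform: dict, state: str) -> list:
    """Classes whose keys are NOT available in the given device state."""
    return sorted(name for name, states in platform.items() if state not in states)


for state in ("before_first_unlock", "locked"):
    print(state)
    print("  iOS sealed:      ", protected_when(IOS, state))
    print("  Android N sealed:", protected_when(ANDROID_N, state))

# For a phone that has been unlocked once and then locked:
#   iOS sealed:       ['complete protection']
#   Android N sealed: []
```

The empty list is the whole problem: on a locked Android N phone that has been unlocked once since boot, no class of data has its key out of memory.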

Of course, you might argue that Android could provide this by forcing application developers to switch back to “device encrypted storage” following a device lock. The problem with this idea is twofold. First, Android documentation and sample code is explicit that this isn’t how things work:

Moreover, a quick read of the documentation shows that even if you wanted to, there is no unambiguous way for Android to tell applications when the system has been re-locked. If keys are evicted when the device is locked, applications will unexpectedly find their file accesses returning errors. Even system applications tend to do badly when this happens.

And of course, this assumes that Android N will even try to evict keys when you lock the device. Here’s how the current filesystem encryption code handles locks:

While the above is bad, it’s important to stress that the real problem here is not really in the cryptography. The problem is that since Google is not giving developers proper guidance, the company may be locking Android into years of insecurity. Without (even a half-baked) solution to define a “complete” protection class, Android app developers can’t build their apps correctly to support the idea that devices can lock. Even if Android O gets around to implementing key eviction, the existing legacy app base won’t be able to handle it — since this will break a million apps that have implemented their security according to Android’s current recommendations.

In short: this is a thing you get right from the start, or you don’t do at all. It looks like — for the moment — Android isn’t getting it right.

Are keys that easy to steal?

Of course it’s reasonable to ask whether having keys in RAM is that big of a concern in the first place. Can these keys actually be accessed?

The answer to that question is a bit complicated. First, if you’re up against somebody with a hardware lab and forensic expertise, the answer is almost certainly “yes”. Once you’ve entered your passcode and derived the keys, they aren’t stored in some magically secure part of the phone. People with the ability to access RAM or the bus lines of the device can potentially nick them.

But that’s a lot of work. From a software perspective, it’s even worse. A software attack would require a way to get past the phone’s lockscreen in order to get running code on the device. In older (pre-N) versions of Android the attacker might need to then escalate privileges to get access to Kernel memory. Remarkably, Android N doesn’t even store its disk keys in the Kernel — instead they’re held by the “vold” daemon, which runs as user “root” in userspace. This doesn’t make exploits trivial, but it certainly isn’t the best way to handle things.

Of course, all of this is mostly irrelevant. The main point is that if the keys are loaded you don’t need to steal them. If you have a way to get past the lockscreen, you can just access files on the disk.

What about hardware?

Although a bit of a tangent, it’s worth noting that many high-end Android phones use some sort of trusted hardware to enable encryption. The most common approach is to use a trusted execution environment (TEE) running with ARM TrustZone.

This definitely solves a problem. Unfortunately it’s not quite the same problem as discussed above. ARM TrustZone — when it works correctly, which is not guaranteed — forces attackers to derive their encryption keys on the device itself, which should make offline dictionary attacks on the password much harder. In some cases, this hardware can be used to cache the keys and reveal them only when you input a biometric such as a fingerprint.
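Some back-of-the-envelope arithmetic shows why forcing guesses onto the device matters. Both rates below are assumptions picked for illustration: 80 milliseconds per guess when every attempt has to pass through the phone's hardware, and one microsecond per guess for an attacker running the derivation on their own machines.

```python
def worst_case_hours(digits: int, seconds_per_guess: float) -> float:
    """Hours needed to try every numeric PIN of the given length."""
    return (10 ** digits) * seconds_per_guess / 3600


ON_DEVICE = 0.08  # assumed: 80 ms per guess, bound to the phone's hardware
OFFLINE = 1e-6    # assumed: 1 microsecond per guess on attacker hardware

for digits in (4, 6):
    slow = worst_case_hours(digits, ON_DEVICE)
    fast = worst_case_hours(digits, OFFLINE)
    print(f"{digits}-digit PIN: {slow:.2f} h on device, {fast:.6f} h offline")
```

Under these assumptions a six-digit PIN takes roughly 22 hours to exhaust on the device and about a second offline. That gap is what the hardware buys you, and it only applies while the keys have not yet been derived.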

The problem here is that in Android N, this only helps you at the time the keys are being initially derived. Once that happens (i.e., following your first login), the hardware doesn’t appear to do much. The resulting derived keys seem to live forever in normal userspace RAM. While it’s possible that specific phones (e.g., Google’s Pixel, or Samsung devices) implement additional countermeasures, on stock Android N phones hardware doesn’t save you.

So what does it all mean?

How you feel about this depends on whether you’re a “glass half full” or “glass half empty” kind of person.

If you’re an optimistic type, you’ll point out that Android is clearly moving in the right direction. And while there’s a lot of work still to be done, even a half-baked implementation of file-based encryption is better than the last generation of dumb FDE Android encryption. Also: you probably also think clowns are nice.

On the other hand, you might notice that this is a pretty goddamn low standard. In other words, in 2016 Android is still struggling to deploy encryption that achieves (lock screen) security that Apple figured out six years ago. And they’re not even getting it right. That doesn’t bode well for the long term security of Android users.

And that’s a shame, because as many have pointed out, the users who rely on Android phones are disproportionately poorer and more at-risk. By treating encryption as a relatively low priority, Google is basically telling these people that they shouldn’t get the same protections as other users. This may keep the FBI off Google’s backs, but in the long term it’s bad judgement on Google’s part.

https://developer.android.com/training/articles/keystore.html#UserAuthentication

Android apps can do something similar, if you hack things together yourself.

I don’t know if the app can ask to relock the keystore, but if it can then you could use the intent system to be notified when the device is locked, to then ask to relock the keystore.

Apps can use time-limited keystore keys to encrypt their data, setting whatever time limits they like. So apps can provide really good data security, but they have to do much of the work themselves.

Thank you for this analysis.

My problem with your article is that you’re making a lot of assumptions for things that you have not verified. You claim that once the user has authenticated the derived keys “seem” to live in userspace RAM. Do you have proof of this or is this just a guess on your part?

It’s pretty well documented in the code. The code even specifies that this is not ideal. https://android.googlesource.com/platform/system/vold/+/master/

I want my phone to be a useless brick until I enter the pass phrase, thank you very much.

You missed the most important reason to choose FDE: It’s simple and it always works, without support from the apps. It works even if the apps deliberately try to screw it up. And, yes, it is effective in many practical situations. First, you often have a chance to turn the phone off before it’s attacked. Second, most casual attackers can’t get past the screen lock without rebooting the phone… and thereby wiping the FDE keys.

File encryption, on the other hand, is complicated. As soon as you force app developers to understand the crypto, or to make decisions about which “class” their data should be in, you’re guaranteeing that most of them will get it wrong.

They’ll put data in the wrong class. They’ll leak information into one class that can be used to derive data from another class. They’ll compromise critical protection to enable trivial pointless “user experience” masturbation, as soon as the marketing department whines that X or Y has to be available from the lock screen. And that’s before we get to the question of simple bugs.

Not to mention the fact that the *system* developers will probably screw up file encryption. All that key management is itself really complicated to get right, starting with really erasing the keys when they’re not supposed to be resident, and going from there.

So FDE should be a non-negotiable baseline. If you want to make the screen lock more meaningful by adding more layers of file encryption on top of that, then I’m totally with you. But it was a huge step backwards for Android to take away the all-encompassing basic protection.

And, by the way, I want that FDE pass phrase to be a helluva lot more complicated than the one I use to unlock the phone once it’s booted.

As you point out, TrustZone and similar are not safe (and the reason for that is that they’re complicated… like file encryption). Even true hardware keying doesn’t always work; chips are more complicated than people think, and chips can be invaded. The only really safe approach is to use a significant amount of keying entropy that comes from entirely outside the device, which means from the user. That means a long pass phrase that you *cannot* realistically use for unlocks. So your “always on” user experience is never going to be truly trustworthy.

*this.

I appreciate the author’s work and I take this very seriously. However, when this phone is off, there is an intense passphrase in order to un-brick it. This is by far the best possible encryption: the device *must* be a brick when restarted. Go ahead and put all the other security bells and whistles on top of this (even if it ends up being encryption layered on top of encryption in some cases) but do not ever take away the fde.

How do you plan to get phone calls, or do anything really, if your phone is a brick when locked?

I want the phone to reliably be a brick when it’s *off*.

Once that’s been achieved, I’m very happy to talk about additionally hardening it when it’s *locked*. But, precisely because the phone does have to do a lot of stuff when it’s locked, that protection will always be relatively unreliable. On the other hand, when it’s completely off, it doesn’t have to do anything at all, and that means that you can get a lot better assurance with relative ease.

What I’m objecting to is weakening the protection of the completely turned off phone, not trying to add more protection for the locked one. They didn’t put file encryption on top of FDE; they *eliminated* FDE in favor of file encryption.

I agree with Sok Puppette. While I do see some benefits in this file-based strategy, I fear that the benefits are outweighed by leaving these decisions of what should and shouldn’t be encrypted up to individual developers. Too many will not invest the time to sufficiently understand what is at stake and, consequently, my device will end up less secure.

I prefer the security and simplicity of FDE.

I also agree that the decryption password required at boot time should be long and complicated, while unlocking the screen should be a less cumbersome process. Since Android does not offer this option, I use a workaround. After setting a PIN for unlocking the screen, I initiate the encryption from the command line, using a long alphanumeric password. The result is just what I desired: a long complicated password at boot, with a numeric PIN to unlock the screen.

The only drawback is that, should my device reboot in the night, its alarm will not function to wake me. However, I wear a watch, which provides a backup alarm – and so, this consequence does not greatly trouble me.

By default all app data is credential-encrypted, meaning encrypted with a key that is not accessible until the user enters their passphrase. Apps can take steps to move their data to the “device-encrypted” bucket, which is available as soon as the device is booted (it’s still encrypted, with a key derived in TrustZone, but the derivation doesn’t depend on a user password). So while apps can do the wrong thing they have to go out of their way to do it, and the way they do it is — by design — somewhat inconvenient for them.

It makes sense for authors of things like alarm apps to move some of their data to device-encrypted storage, so that if your phone reboots in the middle of the night your alarm can still go off in the morning, but these are the exceptions.

Is your claim then that the innumerable iOS apps released since v4 are compromising device security due to poor developer choices? While I’m not saying you’re wrong about that possibility (probability), obviously there must be something that can be done to minimize this problem or Apple would probably have had to deal with the problem much sooner than the recent FBI $1MM purchase.

No, I am not making that claim at all.

Security is not simple. A single layer of added complexity adds an exponential amount of possible attack vectors and vulnerabilities. It can be done right, certainly. But I promise, this will take years and years of issues, exploits, 0-days and so on. And fine. I accept this *if* it is built on top of an FDE encrypted “brick” surface.

I find comfort knowing that if I power my phone off, or if an attacker has to power it off (or does so accidentally) before cryogenically extracting the keys from memory, it is utterly useless to anyone. I’m not naïve enough to suggest this will work against a well-funded state actor, but against most LEOs and opportunistic crooks: yes.

The evidence suggests that Apple has done a good job. But with the fractured and rather chaotic state of Android, I truly believe it will take at least 10 years of pain, risk, and struggle before they can pull something like that off. So, I’ll stick with my brick.

Sorry, but I do not agree with your comment that TrustZone and similar are not safe, especially if your argument is “they are complicated”. What do you mean by that?

As Gal Beniamini mentions in his blog (bits-please), one option could be to use hardware encryption directly, although this implies changes to how things work right now. There is also Apple’s TEE, the Secure Enclave, which runs on a separate processor, giving a really isolated TEE (it uses TrustZone), and offers a quite good encryption system. Trusting the user is never a good security policy, I think.

The problem is not in TrustZone (although it is not a perfect solution); it is in how hardware manufacturers implement TrustZone. ARM does not (physically) build the technology, it only sells the IP, so the SoC developer is responsible for following ARM’s security guidelines. One important thing to know is that the TrustZone solution comprises several components (see the TrustZone White Paper), and in most cases (I would say always) the SoC does not have all of them. So, hardware protection is partial. This was pointed out in “Trustworthy Execution on Mobile Devices”, both the paper (2011) and the book (2014).

Another thing I think is not correct is Matthew Green’s comment about TZ: “when it works correctly”. Most of the vulnerabilities on that blog (I think all) are related to the TEE OS. TrustZone is hardware, and there is a higher software layer where different vulnerabilities have been found (integer overflows, improper memory validation…), which is not ARM TrustZone. The developer of that OS does not necessarily have any relation to the SoC developer.

As I said before, I know TZ is not perfect, and I know TEE as a technology has to improve (important: TEE is not TZ; TZ can be used inside a TEE solution), but I think it is an improvement compared with the other alternatives.

TrustZone is not safe because the code running inside it is always exploitable. The ACTUAL code running in ACTUAL deployed TrustZone systems has been exploited. The original post linked to an example.

Once you get control of what’s inside the enclave, you can extract any secrets that are supposedly meant to stay in there.

It doesn’t matter if you can *theoretically* write non-exploitable code to run inside something like that. The point is that it won’t happen. Modern software engineering practice is not capable of reliably producing that result. Hardware probably isn’t either. Whenever you say “The problem is that company X didn’t do Y”, you’re assuming that there’s a possible world in which they all get it right. There isn’t.

It’s not even COMPLETELY safe to type in a big pass phrase at every boot, because there’s a chance that something will end up sticking it in nonvolatile storage somewhere, where it can be retrieved later. But that’s much less likely than somebody finding an exploit for the code that’s supposed to be managing a deliberately persistent key. And if you design things right, you can force them to do *both*.

You should’ve called it “Android N Cryption”

Fantastic overview of a complex problem. Thanks very much for elucidating the flaws in what I’d thought was a done deal.

It is time for all of these devices to support OPAL-protected flash memory, where the encryption happens at the memory controller. Once locked there is no recovery, and when in use the keys cannot be accessed with software or hardware level access; you have to break the chips. OPAL-compliant SED drives have been available in PCs for over 8 years. The root keys in hardware are bound to the firmware image signature in the drive, so even patching the drive software zeros the drive. If you want real encryption you need hardware. iOS is only as secure as the last OS patch: a targeted phone can be patched and then the keys extracted.

Thank you for the analysis.

Though it isn’t relevant to this discussion, just for the record, I think Apple-style encryption was done long before Apple. I remember a LLNL Technical Report that described a Cray Operating system doing something similar, but sorry I don’t have a reference.

Are pictures on the iPhone under full protection? I ask because I don’t understand how the simple lock screen bypasses you can find on YouTube can be used to display the phone’s pictures without entering the passcode.

MG didn’t explain the fourth class key: Protected Unless Open. This behavior is achieved with asymmetric cryptography using Curve25519. The usual per-file key is protected by a key derived using Diffie-Hellman key agreement.

The ephemeral public key for the agreement is stored alongside the wrapped per-file key. When the file is closed, the per-file key is wiped from memory. To open the file again, the shared secret is re-created using the Protected Unless Open class’s private key and the file’s ephemeral public key, and is then used to unwrap the per-file key that decrypts the file. This is how e-mail attachments downloaded in the background are encrypted even when the iOS device is locked.

So I only worked on the ext4 encryption feature in the Linux kernel which was used to implement Android FBE, and so I’m not an expert on vold, but there shouldn’t be a reason why vold needs to hang on to the key. Vold is responsible for pushing the keys into the kernel, but what ext4 uses to do the per-file encryption is stored on a kernel keyring, *not* in userspace. So vold should be destroying the key after it loads it into memory — it certainly can’t use the key for anything useful.

As far as being able to remove the keys while the phone is locked — I can’t comment on future product directions, but it’s fair to say that the people working on the upper layers of the Android security system aren’t idiots, and the limitations of what was landed in the Android N release was known to them. It is no worse than what we had in older versions of Android (with FDE, we were using dm-crypt, and the keys were living in the kernel as well), and while it doesn’t help improve security with respect to the “evil maid” attack, it does significantly improve the user experience.

I agree that there is certainly room to improve, and note that future changes will require applications to make changes in how they access files, which does make it a much trickier change to introduce into the ecosystem without breaking backwards compatibility with existing applications.

As far as why different keys are being used for different profiles — this makes it much easier to securely remove a corporate, “work” profile from a phone without needing to do a secure erase on the entire flash device — either because an employee is leaving their existing employer and there is corp data on a BYOD phone/tablet, or because the phone has been lost, and corporate security policy about how quickly corp data should be zapped from the phone might be different from what the user might want to exercise when they have temporarily misplaced the phone. (e.g., you might have a different tradeoff of losing unbacked-up data from your personal files versus their getting compromised while you hope that someone turns in your phone to lost+found than your company’s security policies might require.)

It does seem worse though. If I understand it correctly then “Credential encrypted storage” is from a security perspective equivalent to the FDE (i.e. under all conditions when a FDE file would be readable, it would still be readable), and “Device encrypted storage” is worse than FDE, as it remains readable to an adversary even if the device is turned off. I.e. if app developers accidentally or deliberately put private data (and even e.g. alarm times could potentially reveal private information) into the device encrypted class, it weakens security compared to FDE. It also seems like a big wasted opportunity to not include a “Complete protection” class to help protect against exploitation of all software level (e.g. lock screen bypass and/or privilege escalation) bugs if a device falls into the hands of an adversary when turned on, but locked.

If this is the case, why can I, or anyone who knows the Siri lockscreen trick, view your media and contacts? Is that in the always unlocked class? This seems like valuable data to me.

I think this article highlights what we all know: encryption is hard. Then it waxes on about Apple “getting it right”. While I agree that they have done a nice job, both companies have a long way to go.

Apple’s Security Guide states:

“Calendar (excluding attachments), Contacts, Reminders, Notes, Messages, and Photos implement Protected Until First User Authentication. User-installed apps that do not opt-in to a specific Data Protection class receive Protected Until First User Authentication by default.”

I could imagine it has something to do with e.g. wanting to do background backups into the cloud, but it does defeat this wonderful possibility to defend against these kind of lock screen or other software bugs. It also begs the question of how often the “complete protection” class actually gets used?

How often the “complete protection” class actually gets used?

——————————–

From iOS 9.3+ Security Guide:

Complete protection: Home sharing password, iTunes backup, Safari passwords, Safari Bookmarks – all accessible after device unlocking.

Unless I’m missing something, it looks like this article isn’t showing up on the site’s RSS feed. Did the site re-design break something? 😦

Where is the Moto z play Android N updated

Considering the number of major exploits that come out of android and iOS and the horrible state of their app stores, if you store sensitive information on your phone, you’re taking a pretty big risk. These mobile OS’s are not mature, and they are not ready for sensitive information in any way. I consider them toys, not real operating systems. Windows 10 on the other hand has posted amazingly low exploit numbers. Just go to an app store and look at the developers of your favorite apps, chances are you have no idea who these people are and if you think Google and Apple are really protecting you with their app reviews, you’re kidding yourself. The apps stores are full of data mining and malware, that should be a pretty big red flag to anyone putting sensitive information on android or iOS. Until the exploit numbers go down on those operating systems, they are not truly ready for sensitive information. Personally I think Windows 10 is quickly becoming the best mobile OS and it will likely become the more secure mobile OS too.

If you’re still running Flash but worried about device encryption, you have your priorities all mixed up. If you’re hiding information from the government, STOP, and remove said information, because that’s the only way you’re safe. Honestly, you’re not supposed to be able to hide endless piles of information from your own government; that was never a thing. If you feel that threatened by your government, do yourself a favor and delete all that info. Paper is vastly more secure than any consumer operating system. If you do have sensitive information, you put it on a special device that you ONLY use for sensitive information and you lock that device down, but realistically you cannot lock down Android or iOS to be reliably secure; they are not proven yet.

What are your thoughts on Copperhead OS? Does it fix these issues? It’s based off of Android, but is secure.